High Performance Computing (HPC) is built on two main types of computing platforms. The first is the shared memory platform, which runs a single operating system and acts as a single computer in which every processor has access to all of the memory. The largest of these available on the market today are Atos’ BullSequana S1600 and HPE’s Superdome, which max out at 16 processor sockets and 24TB of memory. Coming out later this year, the BullSequana S3200 will supply 32 processor sockets and 48TB of memory.
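
To make the shared memory idea concrete, here is a minimal sketch in Python. It is only an illustration of the programming model, not of how the machines above are actually programmed: the function name `shared_memory_sum` and the worker layout are assumptions made for this example, and Python threads will not give a real speedup for this kind of arithmetic. The point is that every worker reads and writes the same lists directly, with no copying between them.

```python
import threading


def shared_memory_sum(values, num_workers=4):
    """Sum `values` using worker threads that all see the same memory.

    Hypothetical helper for illustration only.
    """
    # One result slot per worker; the list is visible to every thread.
    partial = [0] * num_workers

    def worker(rank):
        # Each worker reads its share of `values` straight from shared memory
        # and writes its result into its own slot of the shared list.
        partial[rank] = sum(values[rank::num_workers])

    threads = [threading.Thread(target=worker, args=(rank,))
               for rank in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every worker has written its slot

    return sum(partial)


if __name__ == "__main__":
    print(shared_memory_sum(list(range(1, 101))))
```

Because each worker writes to a different slot, no locking is needed here; on a real shared memory system, workers that update the same location would have to coordinate their access.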

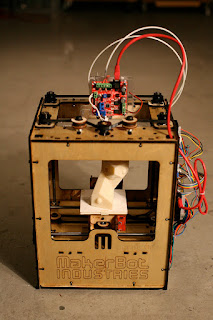

The other type of HPC platform is the distributed memory system, which links multiple computers together through a software stack that lets the separate systems pass data from one machine's memory to another's using a message passing library. These systems first came about as a way to replace expensive shared memory systems with commodity computer hardware, similar to what is in your laptop or desktop computer. Standards for sharing memory through message passing were first developed about three decades ago and formed a new computing industry. About twenty years ago these systems shifted from commodity hardware to specialized platforms, with companies like Cray, Bull (now a part of Atos), and SGI leading the pack.
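
The message passing model can be sketched in a few lines of Python. This is a simulation under stated assumptions, not real cluster code: threads stand in for the separate computers, `queue.Queue` objects stand in for the network, and the names `node` and `message_passing_sum` are invented for this example. A real distributed memory system would use a message passing library such as MPI across separate machines. What the sketch does show is the key difference from shared memory: each node holds its own private copy of its data and can only cooperate by sending and receiving messages.

```python
import queue
import threading


def node(rank, chunk, inbox, outbox):
    """A simulated compute node (hypothetical, for illustration).

    It owns `chunk` privately and communicates only through messages.
    """
    command = inbox.get()  # receive a message from the coordinator
    if command == "sum":
        outbox.put((rank, sum(chunk)))  # send the partial result back


def message_passing_sum(values, num_nodes=4):
    """Sum `values` by handing each node a private copy of its share."""
    results = queue.Queue()  # stands in for the network link back to the coordinator
    inboxes = [queue.Queue() for _ in range(num_nodes)]

    # Slicing copies the data, so each node works on its own private chunk.
    nodes = [
        threading.Thread(
            target=node,
            args=(rank, values[rank::num_nodes], inboxes[rank], results),
        )
        for rank in range(num_nodes)
    ]
    for n in nodes:
        n.start()

    # Tell every node what to do by sending it a message.
    for inbox in inboxes:
        inbox.put("sum")

    # Collect exactly one reply per node, in whatever order they arrive.
    partials = dict(results.get() for _ in range(num_nodes))
    for n in nodes:
        n.join()

    return sum(partials.values())


if __name__ == "__main__":
    print(message_passing_sum(list(range(1, 101))))
```

The replies can arrive in any order, which is why each message carries the rank of the node that sent it.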

Today the main specialized hardware manufacturers are Atos, with its direct liquid cooled Sequana XH2000, and HPE, with a combination of technologies from its acquisitions of both SGI and Cray in the last few years. It is still unclear which product lines will be kept through the mergers. HPC used to be purely a scientific research platform used by the likes of NASA and university research programs, but in recent years it has spread to nearly every industry, from movies, fashion, and toiletries to planes, trains, and automobiles.

The newest use cases for HPC today are in data analytics, machine learning, and artificial intelligence. However, I would say the leading use cases for HPC worldwide are still in the fields of computational chemistry and computational fluid dynamics. Computational fluid dynamics studies how fluids or materials move or flow through mechanical systems. It is used to model things like how detergent pours out of its bottle, how a diaper absorbs liquids, and how air flows through a jet turbine for optimal performance. Computational chemistry uses computers to simulate molecular structures and chemical reaction processes, effectively replacing large chemical testing laboratories with large computing platforms.

The two latest innovations causing a shift in HPC are cloud computing, offered by services such as Google Cloud, Amazon Web Services, and Microsoft Azure, and quantum computing, the next generation of computers, which is still under development and is not likely to be readily available for ten years or more.

If you are interested in learning more about HPC, there are a couple of great places to start. The University of Oklahoma hosts a conference every September that is free and open to the public. This year the event is being held on Sept. 24 and 25; more information about the event can be found at http://www.oscer.ou.edu/Symposium2019/agenda.html. There is also professional training available from the Linux Clusters Institute (http://www.linuxclustersinstitute.org). Scott Hamilton, Senior Expert in HPC at Atos, has also published a book on the Message Passing Interface designed for beginners in the field. It is available on Amazon through his author page (http://amazon.com/author/techshepherd). Many free resources can also be found online by searching for HPC or MPI.